On the deterioration of data

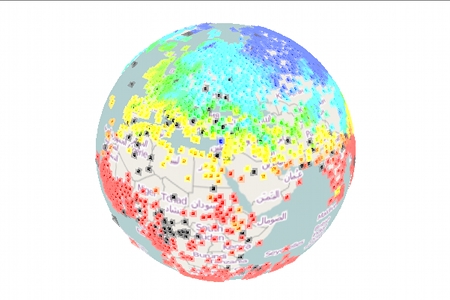

Thursday, August 21st, 2014

Tim and I are currently working on an interactive browser visualization using temperature data from HadCRUT, namely the CRUTEM4 temperature station data, which we map onto a model of the earth (covered with OpenStreetMap maps) with the help of the open-source WebGL Earth API (which seems to be, to a considerable extent, the work of the Czech-Swiss company Klokan Technologies).

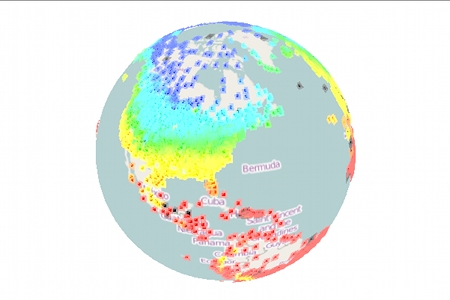

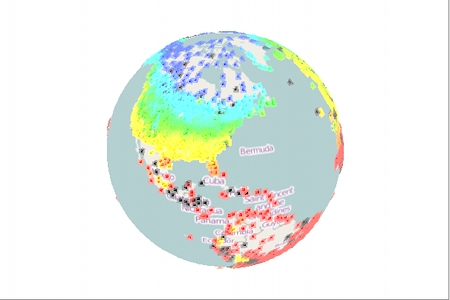

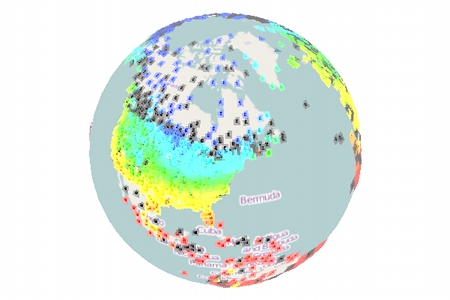

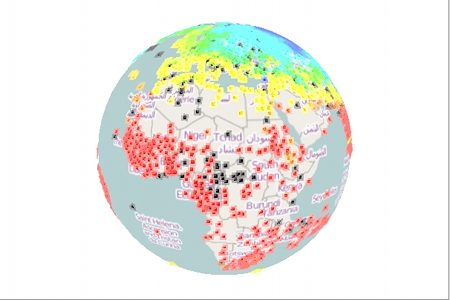

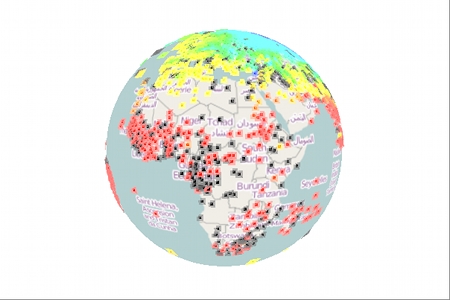

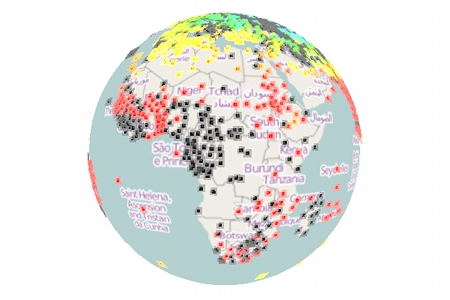

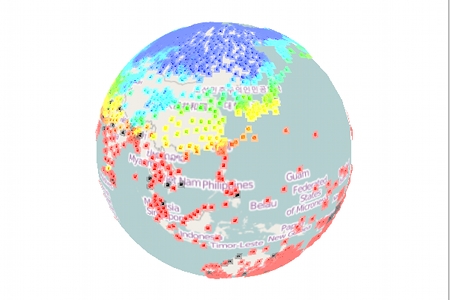

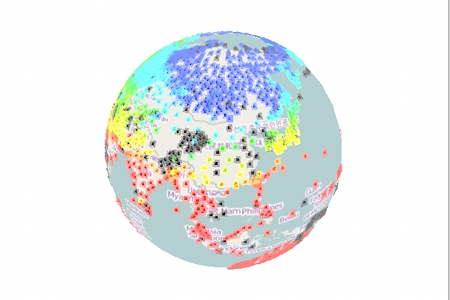

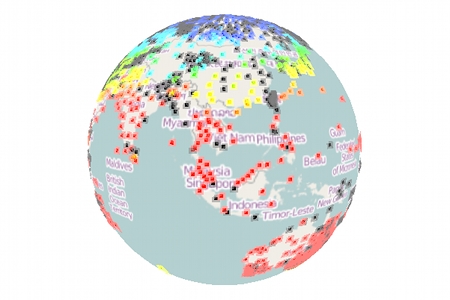

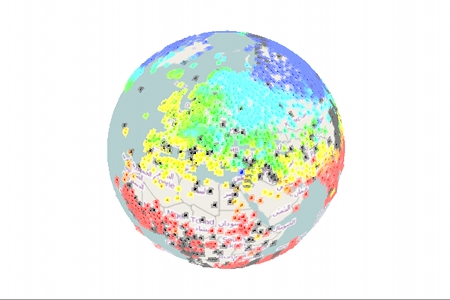

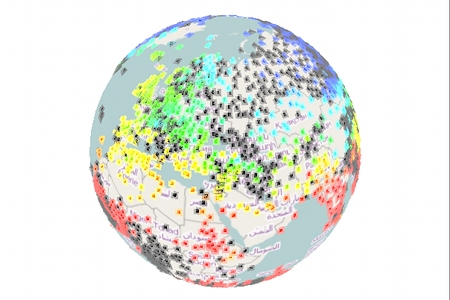

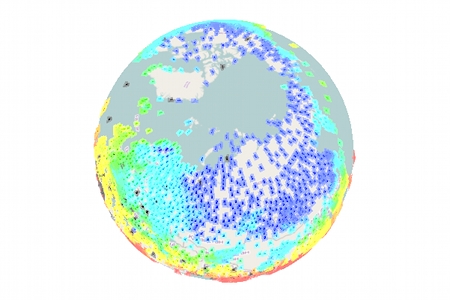

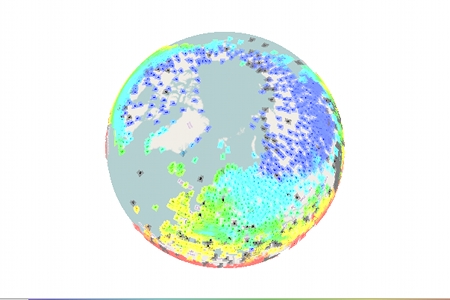

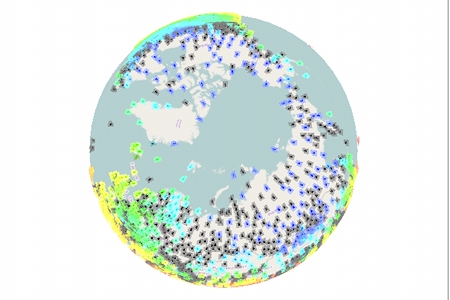

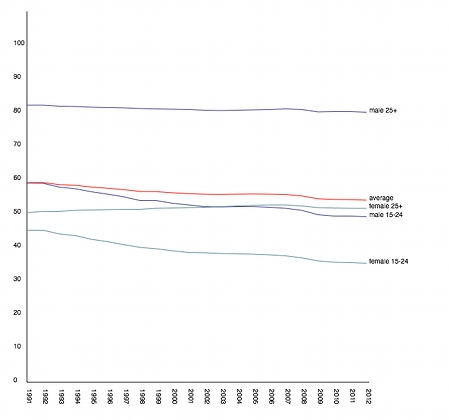

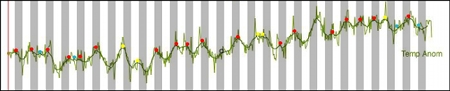

The visualization is still a work in progress, but what is already visible is that the temperature data is deteriorating considerably (please see also the previous randform post on the topic of deterioration of data). It looks as if the deterioration had been bigger in the years 2000-2009 than in the years 1980-2000.

Below you can see screenshots of various regions of the world for the month of January of the years 1980, 2000 and 2009. Each station is represented by a rectangle around its coordinates, and the color of the rectangle indicates the (monthly) temperature value for that station, using the usual hue encoding (blue is cold, red is hot). Black rectangles indicate invalid data. The CRUTEM4 data file contains the data of 4634 stations. Mapping all the station data makes the visualization very slow, especially when scaling, which is why the scalings/views differ slightly for each region and why screenshots are shown here instead. The interactive application will probably not display all stations at once.
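The encoding described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the code of our browser visualization: the color range of -30 °C to +30 °C, the rectangle half-size of 0.5 degrees and the missing-value marker -99.0 are hypothetical choices. The last function counts the share of invalid (black) rectangles for one month, which is one simple way to put a number on the deterioration visible in the screenshots.

```python
import colorsys
import math

# Hypothetical parameters (not taken from the post): the temperature range of
# the color scale in degrees Celsius and the rectangle half-size in degrees.
T_MIN, T_MAX = -30.0, 30.0
HALF_SIZE_DEG = 0.5
# Assumed marker for a missing monthly value; adjust to the actual file format.
MISSING = -99.0


def is_invalid(value):
    """True if a monthly value is missing (None, NaN or the assumed marker)."""
    return value is None or math.isnan(value) or value == MISSING


def hue_color(value):
    """Map a temperature to RGB: blue (cold) to red (hot), black if invalid."""
    if is_invalid(value):
        return (0, 0, 0)
    # Clamp to the color range and normalize to [0, 1].
    t = (min(max(value, T_MIN), T_MAX) - T_MIN) / (T_MAX - T_MIN)
    # Hue 240 degrees (blue) for the coldest value, 0 degrees (red) for the hottest.
    hue = (1.0 - t) * 240.0 / 360.0
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))


def station_rectangle(lat, lon, half=HALF_SIZE_DEG):
    """Rectangle (south, west, north, east) around the station coordinates."""
    return (max(lat - half, -90.0), lon - half, min(lat + half, 90.0), lon + half)


def invalid_fraction(values):
    """Share of stations whose value for a given month and year is invalid."""
    if not values:
        return 0.0
    return sum(1 for v in values if is_invalid(v)) / len(values)


if __name__ == "__main__":
    # Toy values (not real CRUTEM4 data), only to show the call pattern.
    jan_values = {1980: [-3.2, 1.5, None, 12.0], 2009: [-2.8, None, MISSING, 11.4]}
    for year, values in jan_values.items():
        print(year, invalid_fraction(values), [hue_color(v) for v in values])
```

Clamping keeps extreme values at the ends of the scale instead of wrapping the hue around; a real implementation would also have to decide how to handle rectangles crossing the date line.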

Africa:

Eurasia/Northern Africa:

Jan 1980

update June 15, 2019:

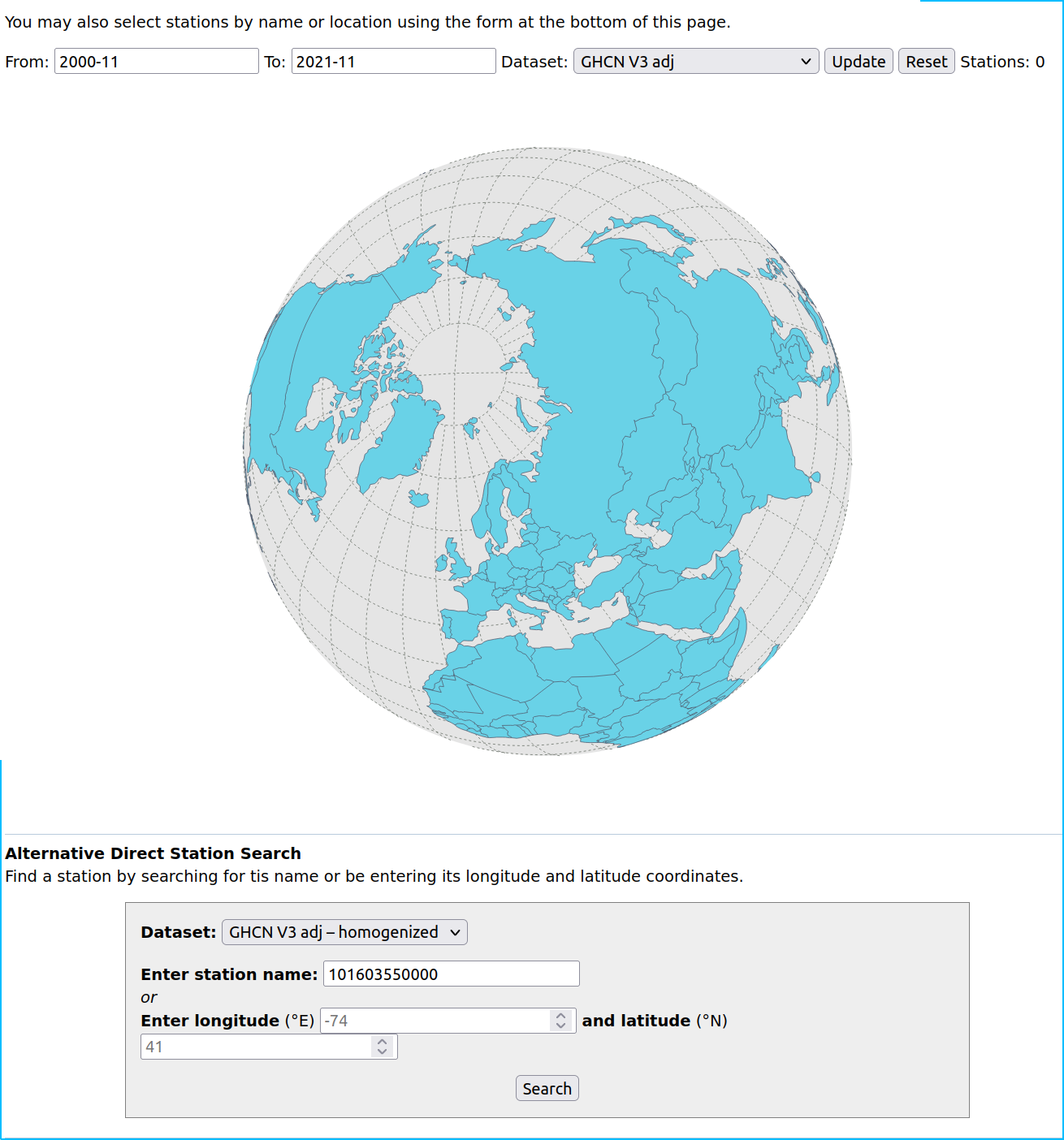

There is now a similar interactive visualization at NASA for the GHCN data set at https://data.giss.nasa.gov/gistemp/stdata/

update December 29, 2021:

The link to our interactive temperature visualization, which was announced above in the post, is here; the corresponding post about it is here.

The GHCN page linked on June 15, 2019 doesn’t show any station data anymore:

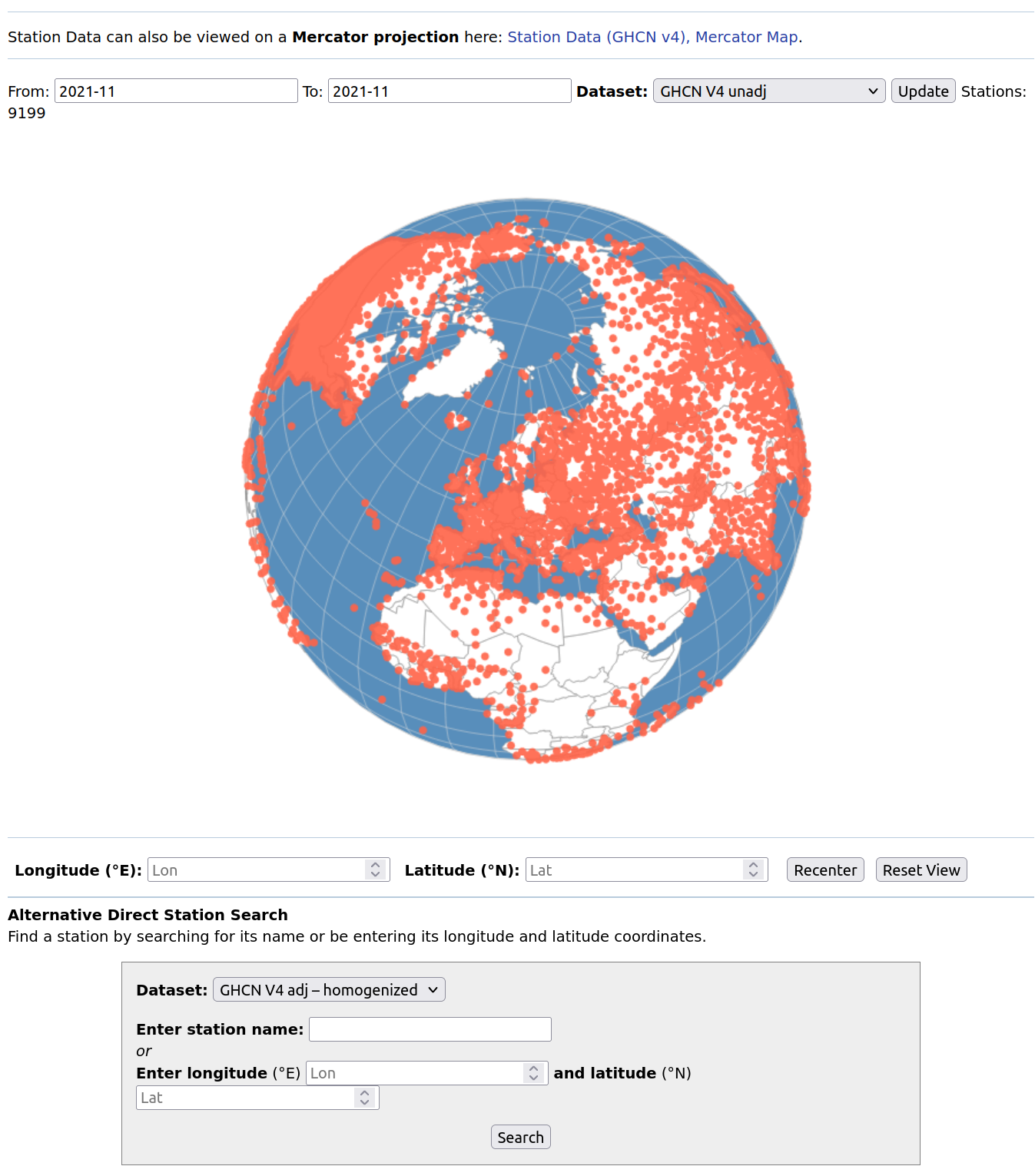

There is currently a new page with a “version 4 dataset” here. This is what it currently looks like:

At first glance this looks as if most of the stations are “back on track”, but I couldn’t find out within a reasonable time how much of this data is “reconstructed” in the sense of an “educated guess” and how much is actual data from the respective stations. Another thing that caught my attention was that the content of the tooltip menu of each station is partially inaccessible; I don’t remember whether this is a new phenomenon.